I had an email exchange with Alex, VE3NEA who is the author of the popular CW Skimmer software. Since CW Skimmer is well known for its ability to decode Morse code even in tough pile-up, noise and contest situations I was asking Alex for any advice how to improve FLDIGI CW decoder functionality. In his response Alex stated that he had worked on the problem for 8 years and tried hundreds of algorithms before he got the first version of the software that worked the way he wanted.

Alex said: "The best advice I can give you is to try to approach every problem in the Bayesian framework. Express all your prior knowledge in the form of probabilities, and use observed data to update those probabilities. For example, instead of making a hard decision at every input sample whether the signal is present or not, compute the probability that the signal is present. At a later stage you will combine that probability with other probabilities using the Bayes formula and pass the new probabilities to the subsequent stages, all the way to the word recognition unit."

Since this was written by a man who really knows how to make great Morse decoder software I started digging deeper in what this Bayesian Framework is all about. I needed to freshen up my memories about probability theory as it has been some 30+ years since I studied this at the university. Perhaps the most clear and concrete examples on conditional probabilities and how to use Bayesian Rule I found from this University of Michigan Open Textbook.

Quick Bayesian Primer

Before applying Bayesian Rule for FLDIGI Morse decoder we need to define some terms first. In the context of Morse code we are dealing with temporal elements like 'dits' and 'dahs' and time between them - these are the short and long audio pulses that Morse code characters consists of when we listen CW on ham bands. Morse decoder would have to break signals into these basic elements to figure out what character was just received. The elements and timing rules are well explained in this Wikipedia article.Bayes' theorem is named for Thomas Bayes (1701–1761), who first suggested using the theorem to update beliefs. To illustrate the Bayes Rule in ham radio context I created a simple picture below in figure 1. I collected some 'dits' and 'dahs' as well as inter-element gaps in between from noisy Morse code signals and used histogram to plot the distribution of the timing of these. The horizontal axis is in milliseconds and vertical is number of occurrences captured in this session. On the right side we see two peaks - at 60 milliseconds and at 180 milliseconds. These corresponds to 'dit' (60 ms) and 'dah' (180 ms). On the negative side I also plotted the inter-character gap - peak is at 60 milliseconds as expected and number of occurrences is roughly double as expected.

|

| Fig 1. Bayes Rule used in Morse Code decoder |

To really make use of the Bayesian Framework we need to collect these numbers to make the probability calculations on the elements. I took existing CW.CXX as basis and completely re-wrote the lower level state machine that does the heavy lifting in dealing with the above timing rules. My focus was to enable collection of these timing elements -- both signals and gaps in between. I also added some code to build statistics on these elements while the decoder is running.

I called the new state machine "edge_recorder()" and it is responsible for detecting KEY DOWN / KEY UP edges and capturing 'dit','dah' and inter-character gap durations into a timecode buffer. It also keeps statistics of these durations in a histogram for Bayesian analysis of the Morse code. GNU Scientific Library (GSL) has well documented libraries for histograms so I used GSL Histogram package.

Bayesian Classifier

I was struggling when writing the classifier software. Baysian Rule P(dit|x) = P(x|dit)*P(dit) / P(x) basically requires you to compute the probability of 'dit' given observed element "x" - this probability could then be passed to the next stage for character classification. The following diagram helped me to formulate how to approach this. We get a continuous stream of numbers representing possible 'dits' and 'dahs'. We need to calculate first a conditional probability of P(x |dit) - like the shaded intersection in figure 2.  |

| Fig 2. Conditional Probability |

We need to define what 'dit' really means. In my case I set the limits so that 'dit' duration can be anything between 0.5 * DIT and 2 * DIT where DIT is the expected duration based on the speed of the received Morse code. For example at 20 WPM (words per minute) DIT would correspond to T = 1200/WPM so 1200/20 = 60 milliseconds. Therefore in my classifier any received element "x" between 30 msec to 120 msec would be classified as 'dit'. To calculate the probability P(x|dit) I need to just look up from histogram values from all the bins between 30 and 120 and divide their total by the sum of bin values from 0 to 300. That gives us the conditional probability of P(x|dit). Same logic applies for 'dahs'. In my classifier any element "x" bigger or equal to 2 * DIT and smaller than 4 * DIT is considered a 'dah'.

Obviously if the Morse speed changes you need to collect a new histogram and re-calculate the probabilities. We have still couple additional items to deal with before we can apply Bayes rule. P(x) is the overall probability of element "x" across all occurrences. I limited this duration between 2*DIT and 4*DIT to remove outliers and impact due to noise. We can calculate P(x) from histogram like we did above. The final missing piece is P(dit). Based on my limited observations P(dit) and P(dah) have roughly equal probabilies in normal (English) ham Morse traffic so I gave them value 0.5. Now we can use the Bayes formula to calculate P(dit|x) = P(x|dit)*P(dit) / P(x). In the software I calculate probabilities P(dit|x) and P(dah|x) and pass these values to the next stage which does character matching.

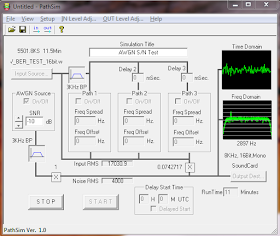

To illustrate the value of this approach please look at the following figure 3. This is a plot of the demodulated noisy signal coming from FFT filtering. It is hard even for humans to decide whether the characters below match with "I N E T" or "I R E A" or "Z N U" or something else. Humans have amazing capability to build beliefs and updating these beliefs based on observations. We are trying to emulate this human behavior. Bayesian rule is frequently used in machine learning systems.

| Figure 3. Noisy Morse code |

Character Classifier

Next step was to build a Morse character classifier that uses the probabilities coming from the previous stage as described above. To simplify the problem I created a simple codebook table that encodes the 'dit' and 'dah' probabilities of each character. Now I just need to multiply incoming number vector representing 'dit'/'dah' probabilities against each codebook entry and then find the best match.// 26 ASCII 7bit letters

{'A', "A", {{1,0},{0,1},{0,0},{0,0},{0,0},{0,0},{0,0}}},

{'B', "B", {{0,1},{1,0},{1,0},{1,0},{0,0},{0,0},{0,0}}},

{'C', "C", {{0,1},{1,0},{0,1},{1,0},{0,0},{0,0},{0,0}}},

{'D', "D", {{0,1},{1,0},{1,0},{0,0},{0,0},{0,0},{0,0}}},

{'E', "E", {{1,0},{0,0},{0,0},{0,0},{0,0},{0,0},{0,0}}},

{'F', "F", {{1,0},{1,0},{0,1},{1,0},{0,0},{0,0},{0,0}}},

{'G', "G", {{0,1},{0,1},{1,0},{0,0},{0,0},{0,0},{0,0}}},

.......

Above is a small section of the codebook. Each character has a set of two numbers - (1,0) means probability of dit is 100% and dah is 0%. Character "E" that has a single 'dit' has this in the first column. This way we can represent the characters as a sequence of probabilities of various 'dit' / 'dah' patterns.

We could also apply the Bayes Rule at this level if we collect additional data on character statistics. For example if we have statistics on the usage frequency of each character we could use that as an additional parameter to multiply the calculated probability coming from the character matching algorithm. This would just require additional frequency histogram, some code to pre-populate this based on selected language and perhaps code to update histogram when we are using the FLDIGI program. I have not implemented these yet.

Word Classifier

The last stage would be the word classifier. As we calculate the probabilities of each character in the previous stage we could send these probabilities upstream to the word classifier. There are a commonly used phrases, acronyms and ham radio jargon that could be used as a corpus to match against received characters.For example if we receive BALAN where the possible 4th letter in order of probabilities are A = 0.2345, U = 0.2340, V=0.1234, R=0.10235 we could use Bayes Rule to figure out probability of BALUN vs. BALAN and emit the most probably word. Similarly we could use a database of contest ham stations and try to match probabilities of received characters to most probably station call sign using Bayes Rule. This is yet another area that would be nice to implement in the future.

Conclusions

I want to thank Alex VE3NEA for opening a whole new avenue of learning about Mr. Bayes and his ideas. Based on my initial results the Bayesian Framework provides new rich data on how to model and improve the performance of the FLDIGI Morse Decoder.The new software I created produces a ton of useful data to analyze what is really happening under extremely noisy conditions and also helps to separate different problems like signal processing & filtering, signal power detection, signal edge detection, classification of 'dits' and 'dahs' and finally also character and word classification.

I have a alpha quality version of Bayesian classifier coded for FLDIGI v.3.21.64 and I will work with Dave W1HKJ and any volunteers to use these ideas to improve FLDIGI Morse decoder even further.

You can leave comments here or you can also find me from linuxham Yahoo mailing list or from http://eham.net

73

Mauri AG1LE